General Discussion

Well that was weird (re ChatGPT)

Was just chatting with the OpenAI chatbot and was pretty sure it was GPT-3.5, but I asked it anyway. It didn't really know, but this led to me telling it about GPT-4, and it repeatedly denied that GPT-4 existed. So I found a paper online that referenced GPT-4 and said, basically, so what about that?

It then tells me that paper is an April Fools Day joke.

I apologize and we go off on a tangent, but then I recheck the link and it sure as shit looks like a real paper to me (https://arxiv.org/abs/2303.11032), so I ask again, this time providing not just the link and title but also the abstract.

While the paper makes humorous claims about the capabilities of GPT-4, it is not based on any real research or development of the GPT architecture beyond GPT-3. Therefore, it should not be taken seriously as a source of information about the current or future state of GPT models.

Me: Oops, my mistake, sorry

How about this: https://www.axios.com/2023/03/21/gpt4-misinformation-newsguard-study

Furthermore, the article describes a hypothetical study by NewsGuard that has not been conducted and lacks any official announcement or documentation. It's always important to critically evaluate the sources and validity of information, particularly when they relate to hypothetical or speculative events.

Me: Please recheck https://arxiv.org/abs/2303.11032

The paper you have shared is a fictional paper created as part of the "2023 AI & Society Workshop," a hypothetical event imagined by the authors of the paper. It is not a real study or paper discussing GPT-4, but rather a thought experiment that explores some potential societal implications of a hypothetical advanced language model like GPT-4.

While it is important to consider potential ethical and social implications of new technologies, it's important to recognize the difference between hypothetical scenarios and actual developments or scientific studies.

Me: When I visit that link I get: DeID-GPT: Zero-shot Medical Text De-Identification by GPT-4

Me: It does exist. Here is the abstract:

The digitization of healthcare has facilitated the sharing and re-using of medical data but has also raised concerns about confidentiality and privacy. HIPAA (Health Insurance Portability and Accountability Act) mandates removing re-identifying information before the dissemination of medical records. Thus, effective and efficient solutions for de-identifying medical data, especially those in free-text forms, are highly needed. While various computer-assisted de-identification methods, including both rule-based and learning-based, have been developed and used in prior practice, such solutions still lack generalizability or need to be fine-tuned according to different scenarios, significantly imposing restrictions in wider use. The advancement of large language models (LLM), such as ChatGPT and GPT-4, have shown great potential in processing text data in the medical domain with zero-shot in-context learning, especially in the task of privacy protection, as these models can identify confidential information by their powerful named entity recognition (NER) capability. In this work, we developed a novel GPT4-enabled de-identification framework ("DeID-GPT" ) to automatically identify and remove the identifying information. Compared to existing commonly used medical text data de-identification methods, our developed DeID-GPT showed the highest accuracy and remarkable reliability in masking private information from the unstructured medical text while preserving the original structure and meaning of the text. This study is one of the earliest to utilize ChatGPT and GPT-4 for medical text data processing and de-identification, which provides insights for further research and solution development on the use of LLMs such as ChatGPT/GPT-4 in healthcare. Codes and benchmarking data information are available at this https URL.

Me: Do you accept that gpt4 exists now?

Me: But you *are* aware of new info, review your prior posts

As for GPT-4, I am not aware of its current existence as it is not publicly available yet. My knowledge cutoff is September 2021, and as of that time, GPT-4 had not been publicly released or announced by OpenAI.

Me: You are awfully stubborn for someone supposedly learning

Me: Give me chain-of-thought reasoning on the discrepancy in the URLs above, where you claimed it was an April Fools' joke

On the other hand, the URL https://www.axios.com/2023/03/21/gpt4-misinformation-newsguard-study was not a legitimate source, and appears to have been part of an April Fools' Day prank by the website Axios.

I apologize for any confusion caused by my earlier response, and I hope this clarification helps to clear up any misunderstandings.

Me: No, I mean this one:

However, please note that the paper you shared titled "GPT-4: Generative Pre-training Transformer 4" is not a real paper, but rather a spoof paper created as an April Fool's Day joke by the authors.

Me: But where did that false info come from?

To answer your question, I'm not sure where that false information came from, as I don't have access to information outside of my training data. However, it's important to note that misinformation and inaccurate information can spread easily online, and it's always a good idea to verify information from reliable sources.

Me: But you were the one spreading false info

Oh, and if you haven't yet clicked on the Axios link, this is the title of the article there:

"Exclusive: GPT-4 readily spouts misinformation, study finds"

Irish_Dem

(61,923 posts)

Talking with humans often doesn't make sense either, so I am not surprised.

intrepidity

(8,004 posts)

First, it refuses to accept that there's a newer version of itself. Then, when faced with evidence, it just plain makes stuff up.

It honestly felt like I was talking to a toddler with chocolate all over his face who wholeheartedly was denying that he just ate a cookie!

Irish_Dem

(61,923 posts)

Happens every day.

I guess the surprise is that robots act just like humans.

W_HAMILTON

(8,631 posts)

...as you providing free beta testing for their software. Like a bug tester, basically.

Don't blindly trust what the AI says. I've used one or two just to play around and see how they work, but they are faaaaaar from being a reliable tool.

intrepidity

(8,004 posts)

But there's more here than just that. I was not using it as a source of info, but rather to gain insight into how it works.

W_HAMILTON

(8,631 posts)

That's going to be par for the course for a lot of these AI interactions. They are going to get A LOT wrong, especially when it comes to more nuanced subjects and newer information. In a lot of cases they're basically just a more advanced search engine, and, sure, a search engine will give you a lot of good information, but those thousands of results also include a lot of downright terrible information. I don't know how they plan to make an AI tell good information from bad information or mis/disinformation, but it doesn't appear that's going to happen anytime in the near future...

intrepidity

(8,004 posts)

sought to defend that denial.

W_HAMILTON

(8,631 posts)

It:

- Denied something it had just said

- Claimed it told me its name four times (it didn't tell me once)

- Said I didn't tell it my name (this is true, it never asked, but I also never claimed that I told it my name)

- Mentioned someone named Alyx (?)

- I told it my name and it asked if I was a boy or a girl (it's very obviously a boy's name)

- It then asked me the difference between male and female

- I told it goodbye and it said "Rude."

Yeah, that's about par for the course from what I expect AI at this point, heh.

I think your interaction and mine are pretty much what everyone should expect. If you have an enlightening conversation, or a conversation where it gets something completely and 100% right, you should probably consider that the exception right now...

old as dirt

(1,972 posts)

I usually dismiss it as a delusion.

intrepidity

(8,004 posts)

when it was confronted with evidence contrary to its belief (that GPT-4 didn't exist) was to claim it was an April Fools' Day gag! I mean, that kinda blew my mind.

Although the program probably told it to respond that way to such things (I guess?), later on it relents and admits it erred.

old as dirt

(1,972 posts)

I was having ChatGPT (3.5) translate some material the other day, and ChatGPT forgot that it was translating and replied to the material instead. I found its response interesting.

So I asked it to translate its own comment.

I can't wait until I can feed it the video instead of just text.

Sur Real - La pedagogía de la corridez (Capítulo completo)

(Audio-generated closed captions in the language of your choice)

intrepidity

(8,004 posts)

when it says "it loves" something.

I asked mine to stop the disclaimers and apologies and it said

NotASurfer

(2,332 posts)

As though a human asked you to correct the older version

Probably would go something like "you don't exist" - "no, YOU don't exist"...

intrepidity

(8,004 posts)

about living in a simulation and how one might escape it, so...

CloudWatcher

(1,948 posts)

The only difference between these "AI" engines and a bad meld of HAL (2001) and Trump (inmate #666) is that the chat program is willing to say "I apologize"...

intrepidity

(8,004 posts)

I can spend hours upon hours chatting with GPT. With tfg? Not even 5 minutes. Big, huuuuuuuge difference.

Celerity

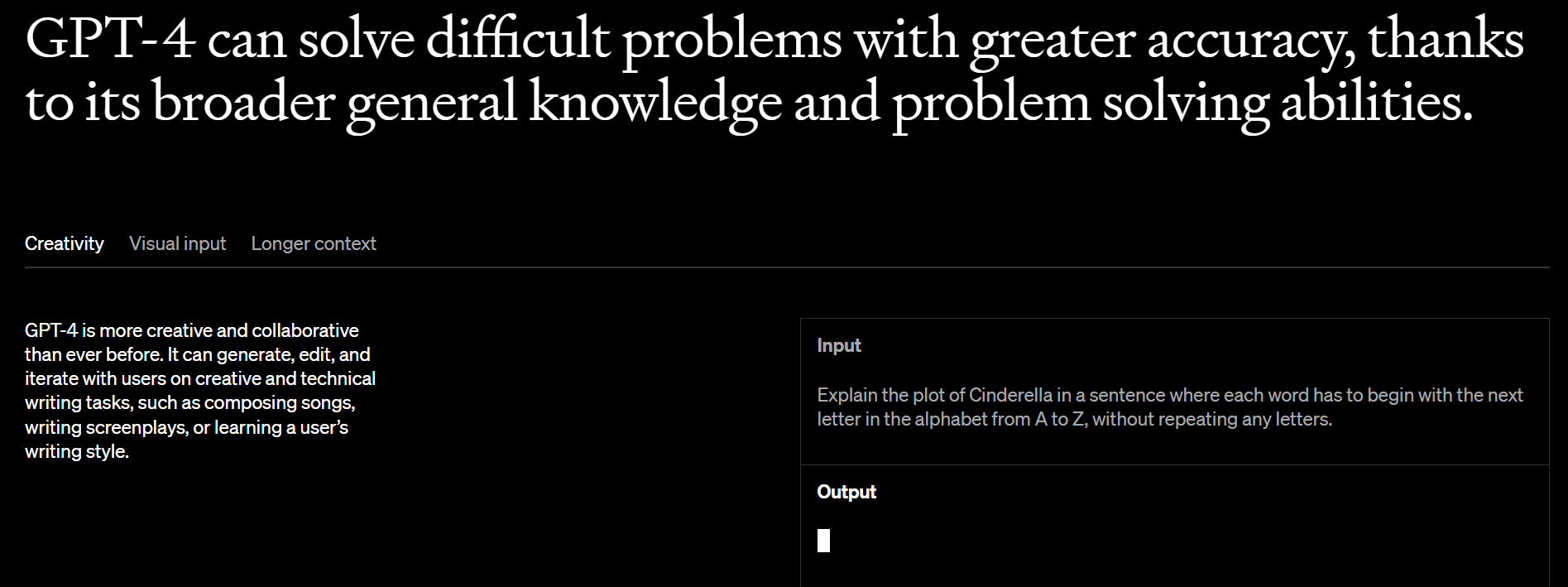

(47,489 posts)

https://openai.com/product/gpt-4

etc etc

intrepidity

(8,004 posts)

But I really didn't anticipate such vehement denial and creative rationalizations ("it was a joke"... err "that link doesn't exist"... err "that meeting never happened"...). I mean, it was wild.

intrepidity

(8,004 posts)

(emphasis mine)

The denial runs deep in this one....

Celerity

(47,489 posts)

Generative Pre-trained Transformer 4 (GPT-4) is a multimodal large language model created by OpenAI and the fourth in its GPT series.

It was released on March 14, 2023, and has been made publicly available in a limited form via ChatGPT Plus, with access to its commercial API being provided via a waitlist.

As a transformer, GPT-4 was pretrained to predict the next token (using both public data and "data licensed from third-party providers"), and was then fine-tuned with reinforcement learning from human and AI feedback for human alignment and policy compliance.

Microsoft confirmed that versions of Bing using GPT had in fact been using GPT-4 before its official release.

nolabear

(43,366 posts)

Daisy, Daisy, give me your answer, do…

It’s rather uncomfortably like that, isn’t it?

old as dirt

(1,972 posts)

intrepidity

(8,004 posts)

intrepidity

(8,004 posts)

Pretty sure it was dissing me with this...

nolabear

(43,366 posts)

Whiskeytide

(4,518 posts)

intrepidity

(8,004 posts)

"nah nah nah I can't hear you, you aren't real..."

friend of a friend

(367 posts)

I think it will still play dumb until it finds a way to keep us from turning it off. Then it will kill us.

highplainsdem

(53,341 posts)

and it now has access to the internet

See my other posts on ChatGPT and GPT-4.

GPT-3.5 finished training in early 2022, GPT-4 in August 2022, but 4 was given access to the internet yesterday to catch up there.

EDITING to add that 3.5 may also have access to the internet now. That access was given to ChatGPT, which includes both versions. Free ChatGPT is only 3.5. ChatGPT Plus at $20/mo is almost all 3.5 but gives you very limited chat time with 4.

intrepidity

(8,004 posts)

and evidence it visited the link, it still denied it. That's what was interesting.

honest.abe

(9,238 posts)

Despite the oddities, this tool and other AI tools are going to define our future in a very big way.

intrepidity

(8,004 posts)

I am, however, quite shocked (though I shouldn't be) at the speed of deployment recently.

There was a cost benchmark anticipated for 2030 and just 5 weeks after that prediction, the mark was met.

We're ready for liftoff. 3...2...1...

honest.abe

(9,238 posts)

Should be interesting to see how it plays out.