Automobile Enthusiasts

NTSB: Tesla Autopilot, distracted driver caused fatal crash

Source: Associated Press

By TOM KRISHER

February 25, 2020

WASHINGTON (AP) — Tesla’s partially automated driving system steered an electric SUV into a concrete barrier on a Silicon Valley freeway because it was operating under conditions it couldn’t handle and because the driver likely was distracted by playing a game on his smartphone, the National Transportation Safety Board has found.

The board made the determination Tuesday in the fatal crash, and provided nine new recommendations to prevent partially automated vehicle crashes in the future. Among the recommendations is for tech companies to design smartphones and other electronic devices so they don’t operate if they are within a driver’s reach, unless it’s an emergency.

Chairman Robert Sumwalt said the problem of drivers distracted by smartphones will keep spreading if nothing is done.

“If we don’t get on top of it, it’s going to be a coronavirus,” he said in calling for government regulations and company policies prohibiting driver use of smartphones.

Much of the board’s frustration was directed at the National Highway Traffic Safety Administration and at Tesla, which have not acted on recommendations the NTSB passed two years ago. The NTSB investigates crashes but only has authority to make recommendations. NHTSA can enforce the advice, and manufacturers also can act on it.

-snip-

Read more: https://apnews.com/d03d88fca7ef389ffbe3469f50e36dcf

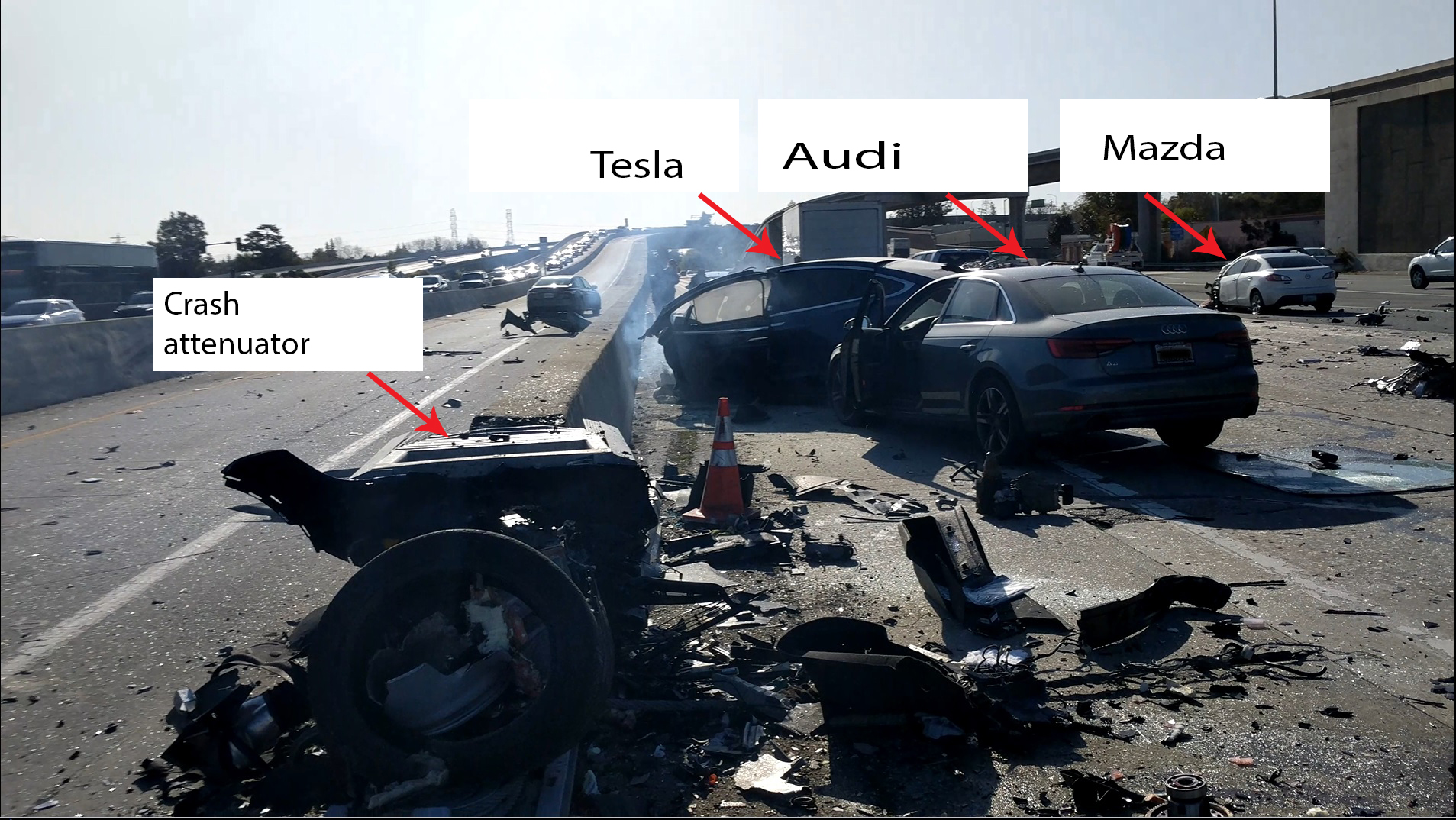

FILE - In this March 23, 2018, file photo provided by KTVU, emergency personnel work at the scene where a Tesla electric SUV crashed into a barrier on U.S. Highway 101 in Mountain View, Calif. The National Transportation Safety Board says the driver of a Tesla SUV who died in a Silicon Valley crash two years ago was playing a video game on his smartphone at the time. Chairman Robert Sumwalt said at the start of a hearing Tuesday, Feb. 25, 2020 that partially automated driving systems like Tesla's Autopilot cannot drive themselves. Yet he says drivers continue to use them without paying attention. (KTVU-TV via AP, File)

______________________________________________________________________

Source: National Transportation Safety Board

National Transportation Safety Board Office of Public Affairs

Tesla Crash Investigation Yields 9 NTSB Safety Recommendations

02/25/2020

WASHINGTON (Feb. 25, 2020) — The National Transportation Safety Board held a public board meeting Tuesday during which it determined the probable cause for the fatal March 23, 2018, crash of a Tesla Model X in Mountain View, California.

Based on the findings of its investigation, the NTSB issued a total of nine safety recommendations whose recipients include the National Highway Traffic Safety Administration, the Occupational Safety and Health Administration, SAE International, Apple Inc., and other manufacturers of portable electronic devices. The NTSB also reiterated seven previously issued safety recommendations.

The NTSB determined the Tesla “Autopilot” system’s limitations, the driver’s overreliance on the “Autopilot” and the driver’s distraction – likely from a cell phone game application – caused the crash. The Tesla vehicle’s ineffective monitoring of driver engagement was determined to have contributed to the crash. Systemic problems with the California Department of Transportation’s repair of traffic safety hardware and the California Highway Patrol’s failure to report damage to a crash attenuator led to the Tesla striking a damaged and nonoperational crash attenuator, which the NTSB said contributed to the severity of the driver’s injuries.

“This tragic crash clearly demonstrates the limitations of advanced driver assistance systems available to consumers today,” said NTSB Chairman Robert Sumwalt. “There is not a vehicle currently available to US consumers that is self-driving. Period. Every vehicle sold to US consumers still requires the driver to be actively engaged in the driving task, even when advanced driver assistance systems are activated. If you are selling a car with an advanced driver assistance system, you’re not selling a self-driving car. If you are driving a car with an advanced driver assistance system, you don’t own a self-driving car,” said Sumwalt.

“In this crash we saw an overreliance on technology, we saw distraction, we saw a lack of policy prohibiting cell phone use while driving, and we saw infrastructure failures that, when combined, led to this tragic loss. The lessons learned from this investigation are as much about people as they are about the limitations of emerging technologies,” said Sumwalt. “Crashes like this one, and thousands more that happen every year due to distraction, are why ‘Eliminate Distractions’ remains on the NTSB’s Most Wanted List of Transportation Safety Improvements,” he said.

The 38-year-old driver of the 2017 Tesla Model X P100D electric-powered sport utility vehicle died from multiple blunt-force injuries after his SUV entered the gore area of the US-101 and State Route 85 exit ramp and struck a damaged and nonoperational crash attenuator at a speed of 70.8 mph. The Tesla was then struck by two other vehicles, resulting in the injury of one other person. The Tesla’s high-voltage battery was breached in the collision and a post-crash fire ensued. Witnesses removed the Tesla driver from the vehicle before it was engulfed in flames.

The NTSB learned from Tesla’s “Carlog” data (data stored on the non-volatile memory SD card in the media control unit) that during the last 10 seconds prior to impact the Tesla’s “Autopilot” system was activated with the traffic-aware cruise control set at 75 mph. Between 6 and 10 seconds prior to impact, the SUV was traveling between 64 and 66 mph following another vehicle at a distance of about 83 feet. The Tesla’s lane-keeping assist system (“Autosteer”) initiated a left steering input toward the gore area while the SUV was about 5.9 seconds and about 560 feet from the crash attenuator. No driver-applied steering wheel torque was detected by Autosteer at the time of the steering movement and this hands-off steering indication continued up to the point of impact. The Tesla’s cruise control no longer detected a lead vehicle ahead when the SUV was about 3.9 seconds and 375 feet from the attenuator, and the SUV began accelerating from 61.9 mph to the preset cruise speed of 75 mph. The Tesla’s forward collision warning system did not provide an alert and automatic emergency braking did not activate. The SUV driver did not apply the brakes and did not initiate any steering movement to avoid the crash.

The driver was an avid gamer and game developer. A review of cell phone records and data retrieved from his Apple iPhone 8 Plus showed a game application was active and was the frontmost open application on his phone during his trip to work. The driver’s lack of evasive action, combined with data indicating his hands were not detected on the steering wheel, is consistent with a person distracted by a portable electronic device.

(In this graphic, the final rest locations of the Tesla, Audi and Mazda vehicles involved in the March 23, 2018, crash in Mountain View, California, are depicted. Photo courtesy of S. Engleman.)

-snip-

Read more: https://www.ntsb.gov/news/press-releases/Pages/NR20200225.aspx

4now (1,596 posts)

htuttle (23,738 posts)

The whole rush to self-driving cars has been scaring the bejeesus out of me. I have 28 years of programming experience, and 18 of those years were spent designing telemetry, tracking and communications systems for the taxi industry. Before that, I spent 10 years behind the wheel, driving in cities for 10-12 hours at a time and dodging the various obstacles in my path.

We're not ready for self-driving cars yet.

Even the really high-tech efforts, like the ones Google has invested in, have years to go before they are ready to unleash on the open streets. They might get it right 95% of the time today, but that last 5% is why humans have to get licenses to drive.

I'm more interested in much better mass transit and personal (and package) transportation devices for the 'last mile'.

LisaM (28,604 posts)

I was almost hit by an electric scooter this morning on my way to catch a bus (I was on the sidewalk, trying to get over to the right side after exiting my building). Riders use them on the sidewalks, go way too fast, and absolutely don't respect that pedestrians have the right of way.

PoliticAverse (26,366 posts)

should have chosen a different name, like "assistpilot".

Eugene (62,658 posts)

The "autopilot" was there to automate routine tasks, where a typical unassisted human would make about one error for each mile driven. It was the driver's responsibility to watch out for conditions the tech couldn't handle.

The NTSB singled out Tesla as being slower than other car makers in deterring driver misuse of the driver-assist "autopilot."

Even autonomous vehicles have remote human supervision, at least in principle.

discntnt_irny_srcsm (18,578 posts)

mcknzAlex (10 posts)

This is the reason why I never use that feature of the car. As drivers, we need to stay focused on the road.